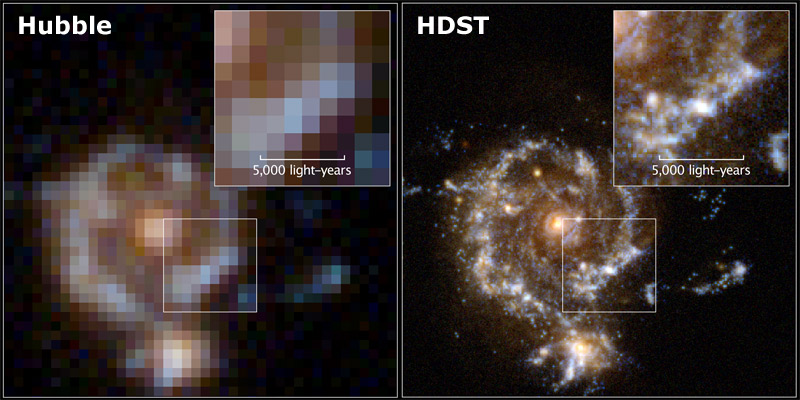

The High-Definition Space Telescope (HDST) is operational

The High-Definition Space Telescope (HDST) is a major new space observatory positioned at Sun-Earth Lagrange point 2, orbiting the Sun about a million miles from Earth. It was proposed in 2015 by the Association of Universities for Research in Astronomy (AURA), the organisation running Hubble and other telescopes on behalf of NASA. Reviewed by the National Academy of Sciences in 2020 and subsequently approved by Congress, the HDST is deployed and operational during the 2030s.* With a diameter of 11.7 metres, it is much larger than both Hubble (2.4 m) and the James Webb telescope (6.5 m).

The HDST is designed to locate dozens of Earth-like planets in our local stellar neighbourhood. It is equipped with an internal coronagraph – a disk that blocks light from the central star, making a dim planet more visible. A starshade is eventually added that can float miles out in front of it to perform the same function. Exoplanets are imaged in direct visible light, as well as being spectroscopically analysed to determine their atmospheres and confirm the presence of water, oxygen, methane and other compounds associated with life.

Tens of thousands of exoplanets have been catalogued since Kepler and other missions of the previous decades. With attention now focused on the most promising candidates for biosignatures, the possibility of detecting the first signs of alien life is greatly increased during this time.

The HDST is 100 times more sensitive than Hubble. Peering into the deep universe, it can resolve objects only 300 light years in diameter, located at distances of 10 billion light years – the nucleus of a small galaxy, for example, or a gas cloud on the way to forming a new star system.* It can study extremely faint objects, up to 20 times dimmer than anything that can be seen from large, ground-based telescopes.

The UV sensitivity of the HDST can be used to map the distribution of hot gases lying outside the perimeter of galaxies. This reveals the structure of the so-called "cosmic web" that galaxies are embedded inside, and shows how chemically enriched gases flow in and out of galaxies to fuel star formation. Individual stars like our Sun can be picked out from 30 million light years away.

Closer to home, the HDST is capable of imaging many features in our own Solar System with spectacular resolution and detail, such as the icy plumes from Europa and other moons, or weather conditions on the gas giants. It can search for remote, hidden members of our Solar System in the Kuiper Belt and beyond. The total cost of the telescope is approximately $10 billion.

Image credit: D. Ceverino, C. Moody, G. Snyder and Z. Levay (STScI)

2030

Global population is reaching crisis point

Rapid population growth and industrial expansion are having a major impact on food, water and energy supplies. During the early 2000s, there were six billion people on Earth. By 2030, there are an additional two billion, most of them from poor countries. Humanity's footprint is such that it now requires the equivalent of two whole Earths to sustain itself in the long term. Farmland, fresh water and natural resources are becoming scarcer by the day.*

The extra one-third of human beings on the planet means that energy requirements have soared, at a time when crude oil supplies are in terminal decline. A series of conflicts has been unfolding in the Middle East, Asia and Africa, at times threatening to spill over into Europe. With America involved too, the world is teetering on the brink of a major global war.

There is the added issue of climate change, with CO2 levels reaching almost 450 parts per million. As a result, natural feedbacks are kicking in on a global scale. This is most apparent in the Arctic, where melting permafrost is now venting almost one gigatonne of carbon annually.** There are signs that a tipping point has been reached, which is manifesting itself in the form of runaway environmental degradation. Nature's ecosystems are changing at a speed and scale rarely witnessed in Earth's history. This is also adding to food shortages, with crop yields falling by up to one third in some regions* and prices of some crops more than doubling,* with devastating impacts on the world's poor.

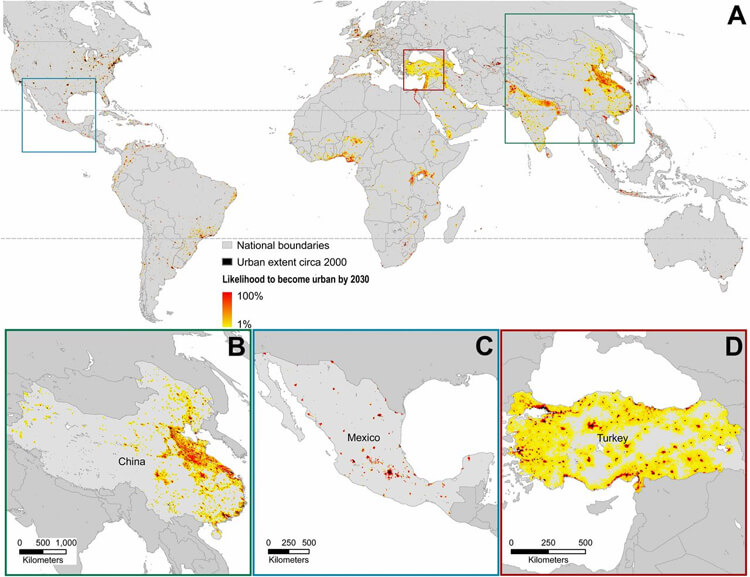

The urban population, which stood at 3.5 billion in 2010, has now surged to almost 5 billion. Resource scarcity, economic and political factors, energy costs and mounting environmental issues are forcing people into ever more crowded and high-density areas. Many cities are merging to form vast sprawling metropolises with hundreds of millions of people. In some nations, those living in urban areas make up over 90% of the population.*

By 2030, urban areas occupy an additional 463,000 sq mi (1.2 million sq km) globally, relative to 2012. This is equivalent to more than 20,000 new football fields being added to the global urban area every day for the first three decades of the 21st century. Almost $30 trillion has been spent during the last two decades on transportation, utilities and other infrastructure. Some of the most substantial growth has been in China, which boasts an urban population approaching one billion and has spent $100 billion annually just on its own projects. Much of the Chinese coastline has been transformed into what is essentially a giant urban corridor. Turkey is another region that has witnessed phenomenal urban development.
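The area and football-field figures above can be sanity-checked with a little arithmetic. The sketch below starts from the square-mile figure and makes two assumptions that are not stated in the text: that "football field" means an American football field including end zones (360 ft by 160 ft), and that the expansion is spread evenly over 30 years of 365.25 days.

```python
# Rough check of the urban-expansion figures; field size and time span are assumptions.
SQ_KM_PER_SQ_MI = 1.609344 ** 2   # one mile is exactly 1.609344 km
FT_TO_M = 0.3048


def sq_mi_to_sq_km(area_sq_mi):
    """Convert an area in square miles to square kilometres."""
    return area_sq_mi * SQ_KM_PER_SQ_MI


def fields_per_day(area_sq_km, years=30, field_ft=(360, 160)):
    """Average number of football fields of new urban land per day."""
    length_m, width_m = (side * FT_TO_M for side in field_ft)
    field_sq_km = length_m * width_m / 1e6
    days = years * 365.25
    return area_sq_km / days / field_sq_km


added_sq_km = sq_mi_to_sq_km(463_000)
print(f"{added_sq_km:,.0f} sq km of new urban land")
print(f"{fields_per_day(added_sq_km):,.0f} football fields per day")
```

Under these assumptions the result is roughly 1.2 million sq km and a little over 20,000 fields per day, which agrees with the comparison in the text.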

Global forecasts of urban expansion to 2030. Credit: Boston University's Department of Geography and Environment

All of this expansion is having a major impact on the surrounding environment. In addition to cities, new networks of road, rail and utilities have been built, crisscrossing the landscape and cutting through major wildlife zones.* What were previously protected areas are now opening up for resource exploitation and food production. Numerous species are reclassified as endangered during this period as a result of human encroachment, pollution and habitat destruction.

The accelerating magnitude of these and other problems is leading to a rapid migration from traditional fossil fuels to renewable energy. Advances in nanotechnology have resulted in greatly improved solar power. In some countries, such as Japan, photovoltaic materials are being added to almost every new building.* Energy supplies in general are becoming more localised and efficient. This transition is putting increasing strain on fossil fuel companies, since the proven reserves of oil, coal and natural gas far exceed the decided "safe" limit for what can be burned. Because most reserves had already been factored into the market value of these organisations, they now face the prospect of huge financial loss. In response, many companies are fighting tooth and nail against further regulation.*

Another issue which governments have to contend with during this time is the aging population, which has seen a doubling of retired persons since the year 2000. People are living longer, healthier lives. With state pension budgets under increasing strain, the overall effect is a decreased income for senior citizens. Retirement ages are increasing: in America, Asia and most European countries, many employees are forced to work into their 70s. Stress levels for the average person have continued to increase, as the world adapts to these various crises.

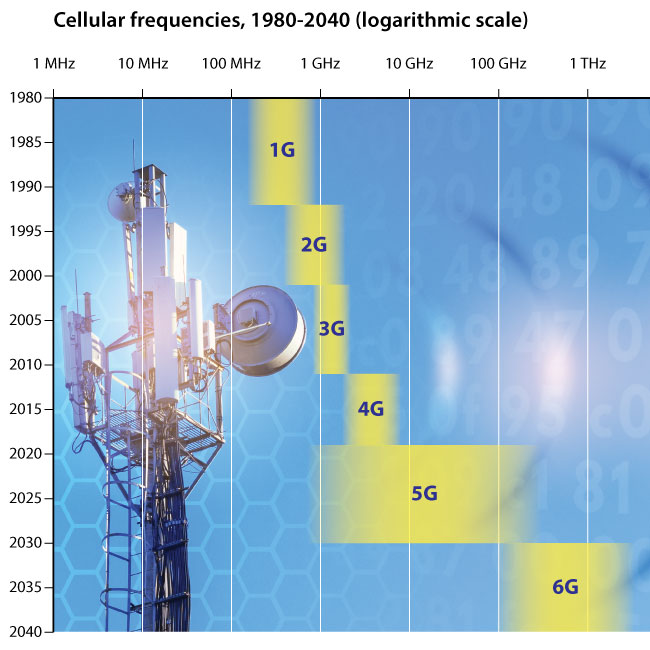

The 6G standard is released

By 2030, a new cellular network standard has emerged that offers even greater speeds than 5G. Early research on this sixth generation (6G) had started during the late 2010s when China,* the USA* and other countries investigated the potential for working at higher frequencies.

Whereas the first four mobile generations tended to operate at between several hundred and several thousand megahertz, 5G had expanded this range into the tens of thousands of megahertz. A revolutionary technology at the time, it allowed vastly improved bandwidth and lower latency. However, it was not without its problems, as exponentially growing demand for wireless data transfer put ever-increasing pressure on service providers, while even shorter latencies were required for certain specialist and emerging applications.*

This led to development of 6G, based on frequencies ranging from 100 GHz to 1 THz and beyond. A ten-fold boost in data transfer rates would mean users enjoying terabits per second (Tbit/s). Furthermore, improved network stability and latency – achieved with AI and machine learning algorithms – could be combined with even greater geographical coverage. The Internet of Things, already well-established during the 2020s, now had the potential to grow by further orders of magnitude and connect not billions, but trillions of objects.

Following a decade of research and testing, widespread adoption of 6G occurs in the 2030s. However, wireless telecommunications are now reaching a plateau in terms of progress, as it becomes extremely difficult to extend beyond the terahertz range.* These limits are eventually overcome, but require wholly new approaches and fundamental breakthroughs in physics. The idea of a seventh standard (7G) is also placed in doubt by several emerging technologies that support the existing wireless communications, making future advances iterative, rather than generational.*

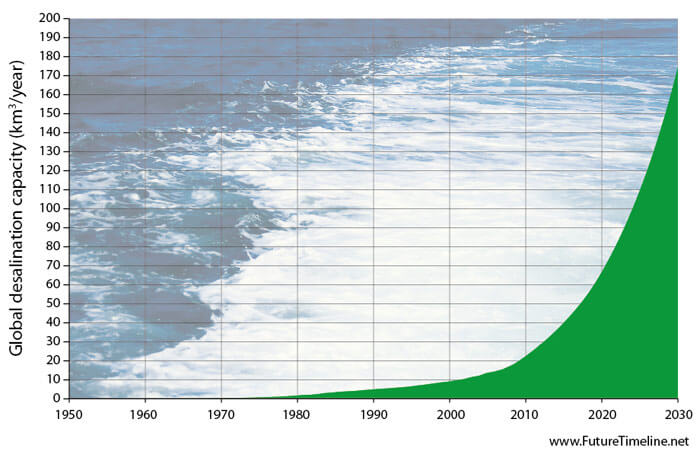

Desalination has exploded in use

A combination of increasingly severe droughts, aging infrastructure and the depletion of underground aquifers is now endangering millions of people around the world. The on-going population growth described earlier is only exacerbating this, with global freshwater supplies continually stretched to their limits. This is forcing a rapid expansion of desalination technology.

The idea of removing salt from saline water had been described as early as 320 BC.* In the late 1700s it was used by the U.S. Navy, with solar stills built into shipboard stoves. It was not until the 20th century, however, that industrial-scale desalination began to emerge, with multi-stage flash distillation and reverse osmosis membranes. Waste heat from fossil fuel or nuclear power plants could be used, but even then, these processes remained prohibitively expensive, inefficient and highly energy-intensive.

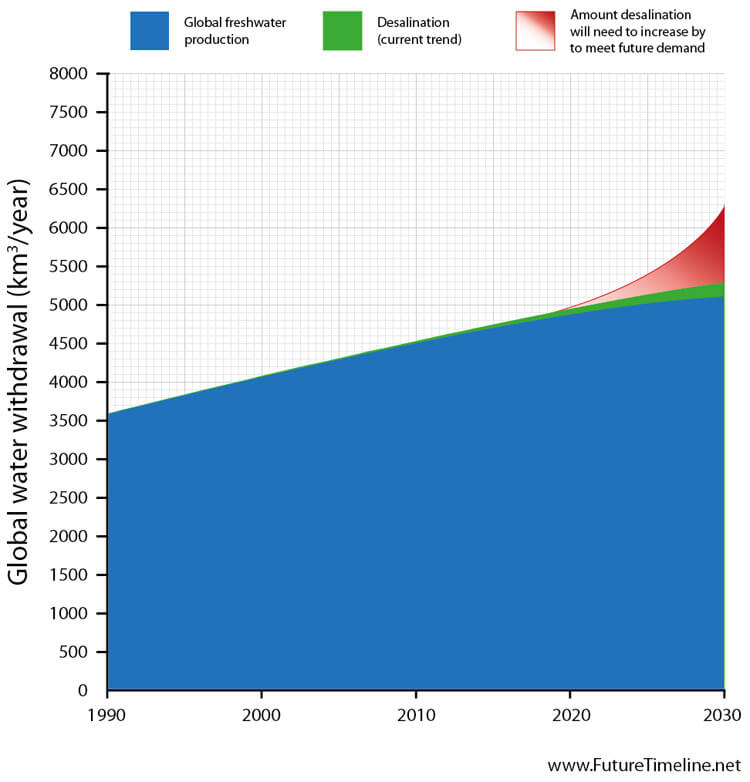

By the early 21st century, the world's demand for resources was growing exponentially. The UN estimated that humanity would require over 30 percent more water between 2012 and 2030.* Historical improvements in freshwater production efficiency were no longer able to keep pace with a ballooning population,* made worse by the effects of climate change.

New methods of desalination were seen as a possible solution to this crisis and a number of breakthroughs emerged during the 2000s and 2010s. One such technique – of particular benefit to arid regions – was the use of concentrated photovoltaic (CPV) cells to create hybrid electricity/water production. In the past, these systems had been hampered by excessive temperatures which made the cells inefficient. This issue was overcome by the development of water-filled micro-channels, capable of cooling the cells. In addition to making the cells themselves more efficient, the heated waste water could then be reused in desalination. This combined process could reduce cost and energy use, improving its practicality on a larger scale.*

Breakthroughs like this and others, driven by huge levels of investment, led to a substantial increase in desalination around the world. This trend was especially notable in the Middle East and other equatorial regions, home to both the highest concentration of solar energy and the fastest growing demand for water.

However, this exponential progress was dwarfed by the sheer volume of water required by an ever-expanding global economy, which now included the burgeoning middle classes of China and India. The world was adding an extra 80 million people each year – equivalent to the entire population of Germany.* By 2017, Yemen was in a state of emergency, with its capital almost entirely depleted of groundwater.* Significant regional instability began to affect the Middle East, North Africa and South Asia, as water resources became weapons of war.*

Amid this turmoil, even greater advances were being made in desalination. It was acknowledged that present trends in capacity – though impressive compared to earlier decades – were insufficient to satisfy global demand and therefore a major, fundamental breakthrough would be needed on a large scale.*

Nanotechnology offered just such a breakthrough. The use of graphene in the water filtration process had been demonstrated in the early 2010s.** This involved atom-thick sheets of carbon, able to separate salt from water using much lower pressure, and hence, much lower energy. This was due to the extreme precision with which the perforations in each graphene membrane could be manufactured. At only a nanometre across, each hole was the perfect size for a water molecule to fit through. An added benefit was the very high durability of graphene, potentially making desalination plants more reliable and longer-lasting.

Unfortunately, patents were secured by corporations that initially limited its wider use. A number of high-profile international lawsuits were brought, as entrepreneurs and companies attempted to develop their own versions. With a genuine crisis unfolding, this led to an eventual restructuring of intellectual property rights. By 2030, graphene-based filtration systems have closed most of the gap between supply and demand, easing the global water shortage.* However, the delayed introduction of this revolutionary technology has caused problems in many vulnerable parts of the world.

In the 2040s* and beyond, desalination will play an even more crucial role, as humanity adapts to a rapidly changing climate. Ultimately, it will become the world's primary source of freshwater, as non-renewable sources like fossil aquifers are depleted around the globe.

"Smart grid" technology is widespread in developed nations

In prior decades, the disruptive effects of energy shocks,* alongside ever-increasing demands of growing and industrialising populations, were putting strain on the world's power grids. Blackouts occurred in the worst-hit regions, with consumers becoming more and more conscious of their energy use and taking measures to monitor or cut back their consumption. This already precarious situation was exacerbated by the relatively ancient infrastructure in many countries. Much of the grid at the beginning of the 21st century was extremely old and inefficient, losing more than half of its available electricity during production, transmission and usage. A convergence of business, political, social and environmental issues forced governments and regulators to finally address this problem.

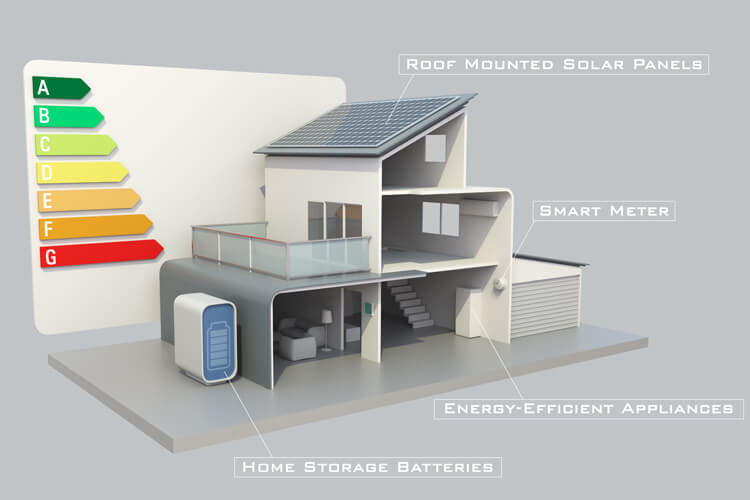

By 2030, integrated smart grids are becoming widespread in the developed world,** the main benefit of which is the optimal balancing of demand and production. Traditional power grids had previously relied on a just-in-time delivery system, where supply was manually adjusted constantly in order to match demand. Now, this problem is being eliminated due to a vast array of sensors and automated monitoring devices embedded throughout the grid. This approach had already emerged on a small scale, in the form of smart meters for individual homes and offices. By 2030, it is being scaled up to entire national grids.

Power plants now maintain constant, real-time communication with all residents and businesses. If capacity is ever strained, appliances instantly self-adjust to consume less power, even turning themselves off completely when idle. Since balancing demand and production is now achieved on a real-time, automatic basis within the grid itself, this greatly reduces the need for "peaker" plants as supplemental sources. In the event of any remaining gap, algorithms calculate the exact requirements and turn on extra generators automatically.
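The two-step balancing described above (appliances throttle first, extra generators cover whatever gap remains) might look something like the following. This is only an illustrative sketch: the `Appliance` class, the `balance` function and the "most flexible first" ordering are assumptions made for the example, not a description of any real grid-control system.

```python
from dataclasses import dataclass


@dataclass
class Appliance:
    name: str
    draw_kw: float  # current power draw
    min_kw: float   # lowest draw it can fall back to (0 means it can switch off)


def balance(appliances, capacity_kw, reserve_kw):
    """Return (adjusted draws by appliance name, reserve generation dispatched in kW)."""
    draws = {a.name: a.draw_kw for a in appliances}
    excess = sum(draws.values()) - capacity_kw

    # Step 1: ask appliances to consume less, most flexible first.
    by_flexibility = sorted(
        appliances, key=lambda a: a.draw_kw - a.min_kw, reverse=True
    )
    for a in by_flexibility:
        if excess <= 0:
            break
        cut = min(a.draw_kw - a.min_kw, excess)
        draws[a.name] -= cut
        excess -= cut

    # Step 2: any remaining gap is met by extra generators, up to the reserve.
    dispatched = min(max(excess, 0.0), reserve_kw)
    return draws, dispatched


home = [
    Appliance("car charger", draw_kw=7.0, min_kw=0.0),
    Appliance("heater", draw_kw=3.0, min_kw=1.0),
    Appliance("fridge", draw_kw=0.5, min_kw=0.5),
]
print(balance(home, capacity_kw=6.0, reserve_kw=2.0))
print(balance(home, capacity_kw=1.0, reserve_kw=2.0))
```

In the first call, throttling the car charger alone closes the gap and no reserve is needed. In the second, every appliance is already at its minimum and a small amount of reserve generation is still dispatched.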

Computers also help adjust for and level out peaks and troughs in energy demand. Sensors in the grid can detect precisely when and where consumption is highest. Over time, production can be automatically shifted according to the predicted rise and fall in demand. Smart meters can then adjust for any discrepancies. Another benefit of this approach is allowing energy providers to raise electricity prices during periods of high consumption, helping to flatten out peaks. This makes the grid more reliable overall, since it reduces the number of variables that need to be accounted for.

Yet another advantage of the smart grid is its capacity for bidirectional flow. In the past, power transmission could only be done in one direction. Today, a proliferation of local power generation, such as photovoltaic panels and fuel cells, means that energy production is much more decentralised. Smart grids now take into account homes and businesses which can add their own surplus electricity to the system, allowing energy to be transmitted in both directions through power lines.

This trend of redistribution and localisation is also making large-scale renewables more viable, since the grid is now adaptable to the intermittent power output of solar and wind. On top of this, smart grids are also designed with multiple full load-bearing transmission routes. This way, if a broken transmission line causes a blackout, sensors instantly locate the damaged area while electricity is rerouted to the affected area. Crews no longer need to investigate multiple transformers to isolate a problem, and blackouts are reduced as a result. This also prevents any kind of domino effect from setting off a rolling blackout.
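The rerouting idea above can be pictured as a path search over the transmission network. The sketch below is a deliberately simplified model: it treats the grid as an undirected graph and uses breadth-first search to find a route that avoids a failed line. The node names and the `find_route` function are hypothetical, and a real grid would also have to respect line capacities and load.

```python
from collections import deque


def find_route(lines, source, target, failed=frozenset()):
    """Breadth-first search for a path from source to target avoiding failed lines."""
    neighbours = {}
    for a, b in lines:
        if (a, b) in failed or (b, a) in failed:
            continue  # skip lines that sensors have reported as broken
        neighbours.setdefault(a, []).append(b)
        neighbours.setdefault(b, []).append(a)

    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in neighbours.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no surviving route: the target is cut off


grid = [("plant", "A"), ("A", "town"), ("plant", "B"), ("B", "town")]
print(find_route(grid, "plant", "town"))
print(find_route(grid, "plant", "town", failed={("A", "town")}))
```

Because the example network has two independent routes to the town, losing one line changes the path rather than causing an outage. With a single route, the function would return `None`.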

Overall, this new "internet of energy" is far more sustainable, efficient and reliable. Energy costs are reduced, while paving the way to a post-carbon economy. Countries that quickly adopt smart grids are better protected from oil shocks, while greenhouse gas emissions are reduced by almost 20 per cent in some nations.* As the shift to clean energy continues, this situation will only improve, expanding to even larger scales. Regions begin merging their grids together on a country-to-country, and eventually continent-wide, basis.*

An interstellar message arrives at Luyten's Star

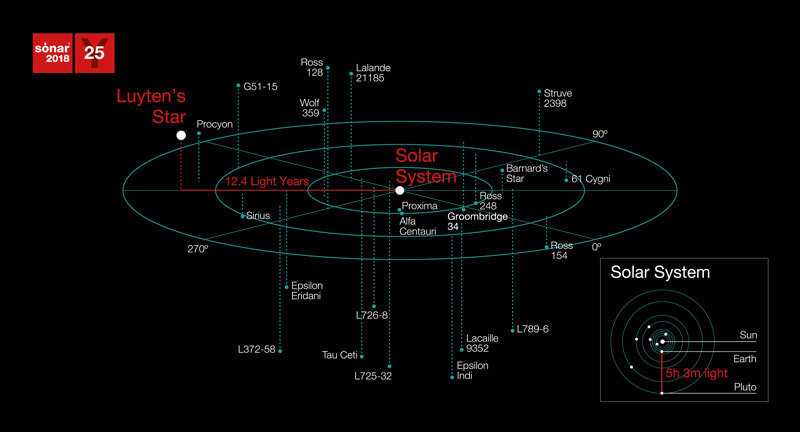

Luyten's Star (GJ 273) is a red dwarf located about 12.4 light-years from Earth. Despite its proximity, it has a visual magnitude of only 9.9, making it too faint to be seen with the naked eye. It was named after Willem Luyten, who, in collaboration with Edwin G. Ebbighausen, first determined its high proper motion in 1935. Luyten's Star is one-quarter the mass of the Sun and has 35% of its radius.

In March 2017, two planets were discovered orbiting Luyten's Star. The outer planet, GJ 273b, was a "Super Earth" with 2.9 Earth masses and found to be lying in the habitable zone, with potential for liquid water on the surface. The inner planet, GJ 273c, had 1.2 Earth masses, but orbited much closer, with an orbital period of only 4.7 days.

In October 2017, a project known as "Sónar Calling GJ 273b" was initiated. This would send music through deep space in the direction of Luyten's Star in an attempt to communicate with extraterrestrial intelligence. The project – organised by Messaging Extraterrestrial Intelligence (METI) and Sónar (a music festival in Barcelona, Spain) – beamed a series of radio signals from a radar antenna at Ramfjordmoen, Norway. The first transmissions were sent on 16th, 17th and 18th October, with a second batch in April 2018.

This became the first radio message ever sent to a potentially habitable exoplanet. The message included 33 music pieces of 10 seconds each, by artists including Autechre, Jean Michel Jarre, Kate Tempest, Kode 9, Modeselektor and Richie Hawtin. Also included were scientific and mathematical tutorials sent in binary code, designed to be understandable by extraterrestrials; a recording of an unborn baby girl's heartbeat; along with poetry and political statements about humans.

Due to the lag from light speed over a distance of 70 trillion miles, the earliest possible date for a response to arrive back would be 2042.*

Credit: Sonar
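The arrival and reply dates for the Luyten's Star message follow directly from the distance, since a radio signal covers one light-year per year. The sketch below treats mid-October 2017 as a fractional year and uses an approximate miles-per-light-year constant. Both are assumptions for illustration, and the reply date assumes an immediate response.

```python
# Light-travel arithmetic for a message sent to Luyten's Star.
MILES_PER_LIGHT_YEAR = 5.8786e12  # approximate

DISTANCE_LY = 12.4
SENT = 2017 + 9.5 / 12  # mid-October 2017 as a fractional year (approximation)


def arrival_year(sent_year, distance_ly):
    """A radio signal travels one light-year per year."""
    return sent_year + distance_ly


arrive = arrival_year(SENT, DISTANCE_LY)
reply = arrival_year(arrive, DISTANCE_LY)  # assumes an immediate response

print(f"Message arrives: {int(arrive)}")
print(f"Earliest reply:  {int(reply)}")
print(f"One-way distance: {DISTANCE_LY * MILES_PER_LIGHT_YEAR:.2e} miles")
```

This gives an arrival in 2030 and an earliest reply in 2042, with a one-way distance of a little over 70 trillion miles.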

Depression is the number one global disease burden

When measured by years of healthy life lost, depression has now overtaken heart disease to become the leading global disease burden.* This includes both years lived in a state of poor health and years lost due to premature death. Principal causes of depression include debt worries, unemployment, crime, violence (especially family violence), war, environmental degradation and disasters. The ongoing economic stagnation around the world is a major contributing factor. However, progress is being made with destigmatising mental illness.*

Child mortality is approaching 2% globally

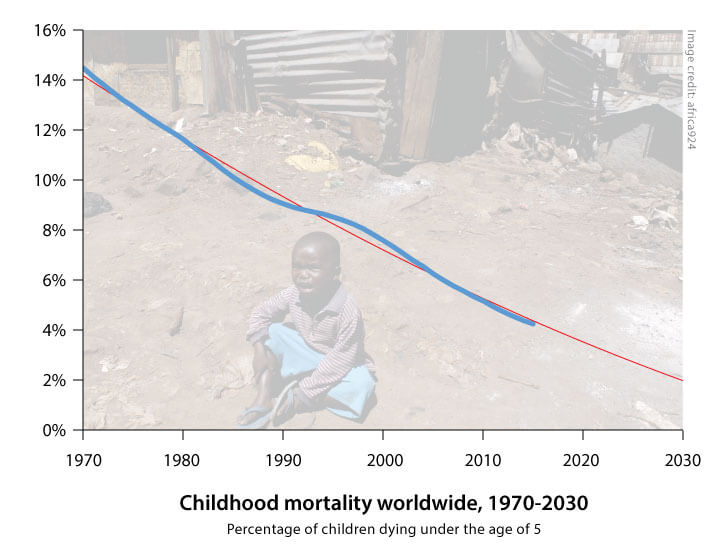

Childhood mortality, defined as the number of children dying under the age of five, was a major issue during the late 20th century. In 1970, more than 14% of children worldwide never saw their 5th birthday, while in Africa the figure was even higher at over 24%. The gap between rich and poor nations was staggering, with a mortality rate of only 24 per 1,000 live births in the most industrialised countries, an order of magnitude lower.*

Improvements in medicine, education, economic opportunity and living standards led to a fall in child deaths over subsequent decades. More and more children were being saved by low-tech, cost-effective, evidence-based measures. These included vaccines, antibiotics, micronutrient supplementation, insecticide-treated bed nets, improved family care and breastfeeding practices, and oral rehydration therapy. The empowerment of women, the removal of social and financial barriers to accessing basic services, new innovations that made the supply of critical services more available to the poor and increasing local accountability were policy interventions that reduced mortality and improved equity.

The U.N.'s Millennium Development Goals included the ambitious target of reducing by two-thirds (between 1990 and 2015) the number of children dying under age five. While this goal failed to be met in time, the progress achieved was still significant – a drop from 92 to 43 deaths per 1,000 live births. Public, private and non-profit organisations, keen to build on their experience and ensure the continuation of this trend, made childhood survival a focus of the new sustainable development agenda for 2030. A new objective was set, which aimed to lower the under-five mortality figure to less than 25 per 1,000 live births worldwide.*

With ongoing improvements in public health and education – aided by widespread access to the Internet in developing regions* – this new goal was largely met, with further declines in childhood mortality from 2015 to 2030. Although some regions in Africa still have unacceptably high rates, the overall worldwide figure is around 2% by 2030.*

One recent development now having a major impact is the mass application of gene drives to control mosquito populations, greatly reducing the number of malaria cases.* Huge advances have also been made in the prevention and treatment of HIV, which is no longer the death sentence it used to be. Some diseases have been eradicated by now, including polio, Guinea worm, elephantiasis, river blindness and blinding trachoma.*

However, the progress achieved in recent decades is now threatened by the worsening problems of climate change and other environmental issues, along with antibiotic resistance.* Even discounting these emerging threats, it is simply impractical and impossible to prevent every childhood death with current levels of technology and surveillance. As such, childhood mortality begins to taper off – not reaching zero until much further into the future.

The Muslim population has increased significantly

By 2030, the Muslim share of the global population has reached 26.4%. This compares with 19.1% in 1990.* Countries which have seen the largest growth rates include Ireland (190.7%), Canada (183.1%), Finland (150%), Norway (149.3%), New Zealand (146.3%), the United States (139.5%) and Sweden (120.2%). Those which have experienced the biggest falls include Lithuania (-33.3%), Moldova (-13.3%), Belarus (-10.5%), Japan (-7.6%), Guyana (-7.3%), Poland (-5.0%) and Hungary (-4.0%).

A number of factors have driven this trend. Firstly, Muslims have higher fertility rates (more children per woman) than non-Muslims. Secondly, a larger share of the Muslim population has entered – or is entering – the prime reproductive years (ages 15-29). Thirdly, health and economic gains in Muslim-majority countries have resulted in greater-than-average declines in child and infant mortality rates, with life expectancy improving faster too.

Despite an increasing share of the population, the overall rate of growth for Muslims has begun to slow when compared with earlier decades. Later this century, both Muslim and non-Muslim numbers will approach a plateau as the global population stabilises.* The spread of democracy* and improved access to education* are emerging as major factors in the slowing fertility rates (though Islam has yet to undergo the sort of renaissance and reformation that Christianity went through).

Sunni Muslims continue to make up the overwhelming majority (90%) of Muslims in 2030. The portion of the world's Muslims who are Shia has declined slightly, mainly because of relatively low fertility in Iran, where more than a third of the world's Shia Muslims live.

Full weather modelling is perfected

Zettaflop-scale computing is now available, which is a thousand times more powerful than the computers of 2020 and a million times more powerful than those of 2010. One field seeing particular benefit during this time is meteorology. Weather forecasts can be generated with 99% accuracy over a two-week period.* Satellites can map wind and rain patterns in real time at phenomenal resolution – from square kilometres in previous decades, down to square metres with today's technology. Climate and sea level predictions can also be achieved with greater detail than ever before, offering greater certainty about the long-term outlook for the planet.

Orbital space junk is becoming a major problem for space flight

Space junk – debris left in orbit from human activities – has been steadily building in low-Earth orbit for more than 70 years. It is made up of everything from spent rocket stages, to defunct satellites, to debris left over from accidental collisions. Individual pieces can be up to several metres across, but most are minuscule particles such as metal shavings and paint flecks. Despite their small size, such pieces of debris often sustain speeds of 30,000 mph – easily fast enough to deal significant damage to a spacecraft. Satellites, rockets and space stations, as well as astronauts conducting spacewalks, have all had to cope with the increasing damage caused by collisions with these particles.

One of the biggest issues with space junk is the fact that it grows exponentially. This trend, along with the increasing number of countries entering space, has made orbital collisions happen almost regularly in recent years. The newest space-faring nations have been particularly affected.

Events similar to the 2009 collision of the US Iridium and Russian Kosmos satellites have raised fears of the so-called Kessler Syndrome. This scenario is where space junk reaches a critical mass, triggering a chain reaction of collisions until virtually every satellite and man-made object in an orbital band has been reduced to debris. Such an event could destroy the global economy and render future space travel almost impossible.

By 2030, the amount of space junk in orbit has tripled, compared to 2011.* Countless millions of fragments can now be found at various levels of orbit. A new generation of shielding for spacecraft and rockets is being developed, along with tougher and more durable space suits for astronauts. This includes the use of "self-healing" nanotechnology materials, though expenses are too high to outfit everything.

Larger chunks of debris have also been impacting on Earth itself more frequently. Though most land in the ocean (since the planet's surface is 70% covered by water), a few crash on land, necessitating early warning systems for people in the affected areas.

Increased regulation has begun to mitigate the growth of space debris, while better shielding and repair technology has reduced the frequency of damage. Increased computing power and tracking systems are also helping to predict the path of debris and instruct spacecraft to avoid the most dangerous areas. Options to physically move debris are also being deployed – including nets and harpoons fired from small satellites, along with ground-based lasers that can push junk into decaying orbits so it burns up in the atmosphere. Despite this, space junk remains an expensive problem for now.

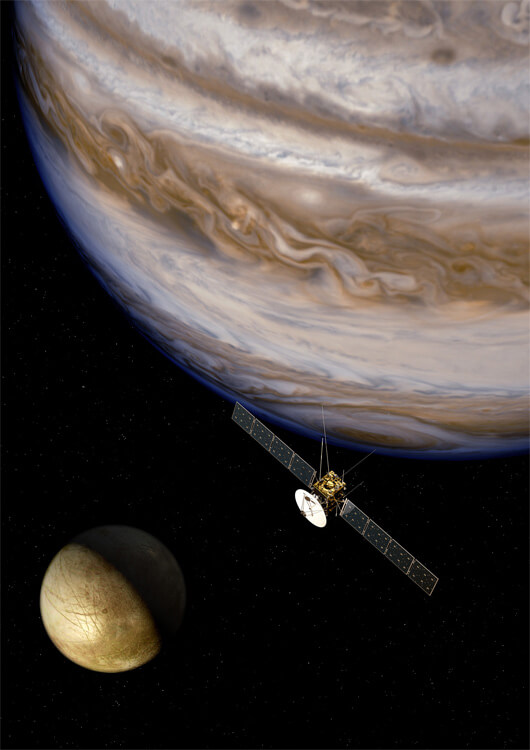

Jupiter Icy Moons Explorer (JUICE) reaches the Jovian system

Jupiter Icy Moons Explorer (JUICE) is a mission by the European Space Agency (ESA) to explore the Jovian system, focussing on the moons Ganymede, Callisto and Europa.* Launched in 2022, the craft goes through a series of Earth-Venus-Earth-Earth gravity assists, before finally arriving at Jupiter in 2030. JUICE initially studies Jupiter's atmosphere and magnetosphere, gaining valuable insight into how the gas giant might have originally formed.

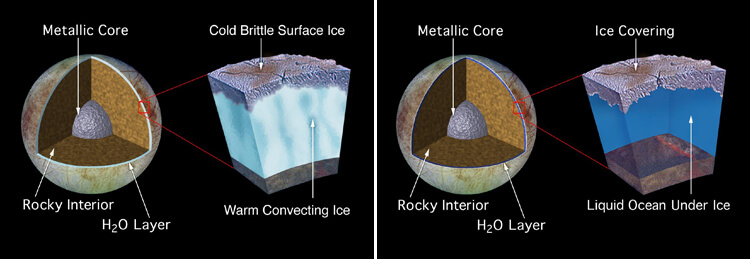

For its primary objective, the probe performs a series of flybys of some of the largest Galilean moons. The focus is on Ganymede, Callisto and Europa, since all are believed to hold subsurface liquid water oceans. JUICE records detailed images of Callisto (which has the most heavily cratered surface in the Solar System), while also taking the first complete measurements of Europa's icy crust and scanning for organic molecules that are essential to life.

Credit: ESA

In 2033, the probe enters orbit around Ganymede for the final phase of the mission. The detailed study includes:

- Characterisation of the different ocean layers, and detection of sub-surface water reservoirs

- Topographical, geological and compositional mapping of the surface

- Confirmation of the physical properties within the icy crust

- Characterisation of internal mass distribution

- Investigation of the exosphere (a tenuous outer atmosphere)

- Study of Ganymede's intrinsic magnetic field and its interactions with the Jovian magnetosphere

- Determining the moon's potential to support life

This final stage of the mission provides a vast wealth of empirical data.* When combined with new information from Callisto and Europa, it generates an extremely detailed picture of the Galilean moons. JUICE also studies possible locations for future surface landings. Indeed, various plans are now underway to further explore the Jovian system, with mission capabilities being enhanced by a new generation of cheaper and reusable rockets. This includes sample return missions and the first lander to drill down and explore the subsurface liquid oceans.*

Two possible models of Europa. Credit: NASA

The UK space industry has quadrupled in size

In 2010, the UK government established the United Kingdom Space Agency (UKSA). This replaced the British National Space Centre and took over responsibility for key budgets and policies on space exploration – representing the country in all negotiations on space matters and bringing together all civil space activities under one single management.

By 2014, the UK's thriving space sector was contributing over £9 billion ($15.2 billion) to the economy each year and directly employing 29,000 people, with an average growth rate of almost 7.5%. Recognising its strong potential, the government backed plans for a fourfold expansion of the industry.* Legal frameworks were created to allow the first spaceport to be established in the UK – spurring the growth of space tourism, launch services and other hi-tech companies. UKSA would also prove instrumental in making use of real-time data from Europe's new Galileo satellite navigation system.

By 2030, the UK has become a major player in the space industry, with a global market share of 10%. Having quadrupled in size, its space industry now contributes £40 billion ($67 billion) a year to the economy and has generated over 100,000 new high-skilled jobs.* The UK has significantly increased its leadership and influence in crucial areas like satellite communications, Earth observation, disaster relief and climate change monitoring. The growth of space-based products and services means the UK is now among the first 100% broadband-enabled countries in the world.* This has also reduced the costs of delivering Government services to all citizens, regardless of their location.
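The growth figures above imply a yearly rate that can be checked with a little arithmetic. The sketch below is illustrative only: it assumes the 2014 baseline is exactly £9 billion (the text says "over £9 billion"), that growth compounds at a constant rate, and that the period runs from 2014 to 2030. The function names are hypothetical.

```python
def implied_annual_growth(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_value into end_value."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, annual_rate: float, years: int) -> float:
    """Compound start_value forward at annual_rate for the given number of years."""
    return start_value * (1 + annual_rate) ** years


# Figures from the text; treating "over £9 billion" as exactly 9 is an assumption.
START_GBP_BN = 9.0
END_GBP_BN = 40.0
YEARS = 2030 - 2014

required_rate = implied_annual_growth(START_GBP_BN, END_GBP_BN, YEARS)
at_historic_rate = project(START_GBP_BN, 0.075, YEARS)

print(f"Growth needed to reach £40bn: {required_rate:.1%} per year")
print(f"Size in 2030 at 7.5% per year: £{at_historic_rate:.1f}bn")
```

Under these assumptions, reaching £40 billion requires growth of a little under 10% a year, somewhat faster than the 7.5% rate quoted for 2014.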

The Lockheed Martin SR-72 enters service

The SR-72 is an unmanned, hypersonic aircraft intended for intelligence, surveillance and reconnaissance. Developed by Lockheed Martin, it is the long-awaited successor to the SR-71 Blackbird, which was retired in 1998. The plane combines a traditional turbine with a scramjet to achieve speeds of Mach 6.0, making it about twice as fast as the SR-71 and capable of crossing the Atlantic Ocean in under an hour. A scaled demonstrator was built and tested in 2018. This was followed by a full-size demonstrator in 2023 and then entry into service by 2030.* The SR-72 is similar in size to the SR-71, at approximately 100 ft (30 m) long. Its operational altitude of 80,000 feet (24,400 metres), combined with its speed, makes the SR-72 almost impossible to shoot down.

Credit: Lockheed Martin
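The claim that Mach 6 allows an Atlantic crossing in under an hour can be sanity-checked with a rough calculation. The sketch below rests on assumptions that are not in the article: a speed of sound of about 295 m/s in the cold air at cruising altitude, a crossing distance of about 5,570 km (roughly New York to London), and constant cruise speed with no time spent accelerating or climbing.

```python
# Assumed values (not from the article): speed of sound at altitude and route length.
SPEED_OF_SOUND_M_S = 295.0   # approximate value in the cold stratosphere
ATLANTIC_KM = 5_570.0        # approximate New York to London distance
MACH = 6.0                   # cruise Mach number quoted in the text


def cruise_speed_kmh(mach: float, speed_of_sound_m_s: float) -> float:
    """Convert a Mach number to km/h for a given local speed of sound."""
    return mach * speed_of_sound_m_s * 3.6


def crossing_time_minutes(distance_km: float, speed_kmh: float) -> float:
    """Time to cover distance_km at a constant speed, in minutes."""
    if speed_kmh <= 0:
        raise ValueError("speed must be positive")
    return distance_km / speed_kmh * 60


speed = cruise_speed_kmh(MACH, SPEED_OF_SOUND_M_S)
minutes = crossing_time_minutes(ATLANTIC_KM, speed)

print(f"Cruise speed: about {speed:,.0f} km/h")
print(f"Crossing time: about {minutes:.0f} minutes")
```

With these inputs the crossing takes a little under an hour, consistent with the figure quoted above. A real flight would take longer once acceleration and deceleration are included.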

A new generation of military helicopters

For many years, the helicopters used by the US Air Force had been essentially anachronistic. Though continually upgraded with new technology, the underlying design of helicopters in the 2000s and 2010s was the same as it had been for decades.

In America's modern wars, helicopters primarily served in transport, reconnaissance and supply roles. This remains true today. However, the Air Force is now finally implementing a new fleet, taking over from the aging Black Hawk and Chinook.**

While aircraft are fielded in a variety of size classes, the most prominent additions are the Joint Multi-Role (JMR) rotorcraft and the Joint Heavy Lift (JHL) rotorcraft. The JMR rotorcraft is designed with a propulsion method similar to that of the V-22 Osprey of earlier decades. The tilt-rotor design allows for both vertical take-offs and forward thrust flight. It can sustain speeds of over 200 mph, with a combat range of about 1,000 miles and a maximum altitude of 6,000 feet.* Along with traditional combat operations, the JMR rotorcraft is used in a wide variety of roles including reconnaissance, search-and-rescue, medevac, transport and anti-submarine warfare. Production of the craft will continue throughout the 2030s, fully replacing the Black Hawk when it retires in 2038.

Joint Multi-Role (JMR) rotorcraft, circa 2030. Image courtesy of U.S. Army

Alongside the JMR rotorcraft is the Joint Heavy Lift (JHL) rotorcraft, a major addition to the fleet. Utilising a similar tilt-rotor design, it is capable of speeds up to 290 mph (when the engines are in the horizontal turboprop position), with a range of 600 miles, and is able to carry a payload of 25 tons. This makes it a viable alternative to the Air Force's C-130J Super Hercules transport aircraft.

Joint Heavy Lift (JHL) rotorcraft, circa 2030. Image courtesy of U.S. Army

Both aircraft, along with the other models now entering service, are optionally manned. The JMR in particular makes use of this, being able to work in large, semi-autonomous squadrons. Onboard computers manage the data gathered from a myriad of sensors, keeping the aircraft in formation at safe distances while monitoring altitude and weather.

For combat roles, the JMR may still use human pilots, but remote control is becoming more popular. In less complex missions, such as transport, general flight instructions are usually entered into the flight computer, allowing for essentially autonomous flight. The same is true for the Joint Heavy Lift rotorcraft. Internal sensors monitor for even the slightest damage. Repairs are regularly made in flight, often with self-healing materials. Ground repair is usually done with robots, making human intervention largely unnecessary.

Hyper-fast crime scene analysis

Crime scene analysis and forensic science have become extraordinarily rapid and sophisticated, thanks to the convergence of a bewildering array of technologies. Investigations that might have taken hours, days or weeks in earlier decades can now be completed in a matter of seconds.

On-person technology has turned the average FBI agent into a walking laboratory. Advanced augmented reality and powerful AI, combined with ultra-fast broadband and cloud networks, allow crime scenes to be viewed in unprecedented new ways. Details can be picked out of surroundings simply by looking around. This may include biological evidence such as blood or hair, as well as fingerprints, footprints, tire tracks, and even particulates in the air. Massive online databases can be accessed in the field, to compare any relevant findings.* Facial recognition, combined with online criminal records, allows full instant profiles to be generated on a suspect through an officer's augmented field of vision. New AI programs can identify any suspicious behaviour or familiar faces.*

DNA scanning in particular has seen major breakthroughs in recent years. The rate of genome sequencing has grown so rapidly that the equivalent of the entire Human Genome Project can be performed almost instantly,** using special touch-sensitive gloves. Plant and animal DNA from millions of different species can also be identified in addition to that of humans. New algorithms have been introduced to analyse the vast amount of genomic data and pick out specific genomes.

Near-instant sequencing of genomes on industrial-sized machines had already begun to emerge in the latter half of the 2010s. However, there remained the problem of accuracy (machines still had error rates) and portability. Successive generations of nanotechnology gradually reduced the cost, time and equipment required.** By 2030, sequencing is available with negligible cost, very high accuracy, hand-held portability and vast online databases for comparing victim and suspect information in precise detail. When combing a crime scene, it is even possible to identify a face using DNA evidence alone.*

Half of America's shopping malls have closed

For much of the 20th century, shopping malls were an intrinsic part of American culture. At the peak in the mid-1990s, the country was building 140 new shopping malls every year. But from the early 2000s onward, underperforming and vacant malls – known as "greyfield" and "dead mall" estates – became an emerging problem. In 2007, a year before the Great Recession, no new malls were built in America, for the first time in half a century. Only a single new mall, City Creek Center Mall in Salt Lake City, was built between 2007 and 2012. The economic health of surviving malls continued to decline, with high vacancy rates creating a glut of retail space.*

A number of changes had occurred in shopping and driving habits. More and more people were living in cities, with fewer interested in driving and people in general spending less than before. Tech-savvy Millennials (also known as Generation Y), in particular, had embraced new ways of living. The Internet had made it far easier to identify the cheapest products and to order items without having to visit a store in person. In earlier decades, this had mostly affected digital goods such as music, books and videos, which could be obtained in a matter of seconds – but eventually even clothing could be downloaded, thanks to the widespread proliferation of 3D printing in the home.* Many of these abandoned malls are now being converted to other uses, such as housing.

Emerging job titles of today

Some of the new job titles becoming widespread in 2030 include the following.*

- Alternative Vehicle Developer

- Avatar Manager / Devotee

- Body Part Maker

- Climate Change Reversal Specialist

- Memory Augmentation Surgeon

- Nano Medic

- Narrowcaster

- 'New Science' Ethicist

- Old Age Wellness Manager / Consultant Specialist

- Quarantine Enforcer

- Social 'Networking' Officer

- Space Pilot / Orbital Tour Guide

- Vertical Farmer

- Virtual Clutter Organizer

- Virtual Lawyer

- Virtual Teacher

- Waste Data Handler

Cargo Sous Terrain becomes operational in Switzerland

The Cargo Sous Terrain is an underground, automated system of freight transport that becomes operational in Switzerland from 2030 onwards.* It is designed to mitigate the increasing problem of road traffic, which has grown by 45% in the region since the mid-2010s. This tube network, including the self-driving carts and transfer stations, is built at a cost of $3.4 billion and is privately financed. The entire project is powered by renewables.

An initial pilot tunnel is constructed 50 metres below ground, with a total length of 41 miles (66 km). This connects Zurich, the largest city in Switzerland, with logistics centres near Bern (the capital) in the west. The route includes four above-ground waystations that enable cargo transfers. The pilot tunnel is followed by an expanded network that links Zurich with Lucerne and eventually Geneva, spanning the entire width of the country.

The unmanned, automated vehicles are propelled by electromagnetic induction and run at 19 mph (30 km/hour), operating 24 hours a day. An additional monorail system for packages, in the roof of the tunnel, moves at twice this speed. The Cargo Sous Terrain allows goods to be delivered more efficiently, at more regular intervals, while cutting air and noise pollution, as well as reducing the burden of traffic on overground roads and freight trains.***
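The quoted tunnel length and vehicle speeds translate into end-to-end transit times. The sketch below is a rough illustration using only figures from the text (66 km route, 30 km/h carts, a monorail at twice that speed). It assumes nonstop travel along the full pilot tunnel, with no time spent at the waystations, and the function name is hypothetical.

```python
ROUTE_KM = 66.0                            # pilot tunnel length from the text
CART_SPEED_KMH = 30.0                      # cart speed from the text
MONORAIL_SPEED_KMH = 2 * CART_SPEED_KMH    # "twice this speed"


def transit_minutes(distance_km: float, speed_kmh: float) -> float:
    """Nonstop travel time in minutes at a constant speed."""
    if speed_kmh <= 0:
        raise ValueError("speed must be positive")
    return distance_km / speed_kmh * 60


cart_minutes = transit_minutes(ROUTE_KM, CART_SPEED_KMH)
monorail_minutes = transit_minutes(ROUTE_KM, MONORAIL_SPEED_KMH)

print(f"Cart, full route: about {cart_minutes:.0f} minutes")
print(f"Monorail, full route: about {monorail_minutes:.0f} minutes")
```

On these assumptions a cart covers the full route in a little over two hours and a monorail package in about half that time. The speeds are modest, so the benefit comes from continuous 24-hour operation rather than from fast individual trips.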

The entire ocean floor is mapped

While humans had long ago conquered the Earth's land masses, the deep oceans lay mostly unexplored. In the early years of the 21st century, only 20% of the global ocean floor had been mapped in detail. Even the surfaces of the Moon, Mars and other planets were better understood. With data now becoming as important a commodity as oil, researchers set out to acquire knowledge of the remaining 80% and uncover a potential treasure trove of hidden information.

Seabed 2030 – a collaborative project between the Nippon Foundation of Japan and the General Bathymetric Chart of the Oceans (GEBCO) – aimed to bring together all available bathymetric data to produce a definitive map of the world ocean floor by 2030.

As part of the effort, fleets of automated vessels capable of trans-oceanic journeys would cover millions of square miles, carrying with them an array of sensors and other technology. These uncrewed ships, monitored by a control centre in Southampton, UK, would deploy tethered robots to inspect points of interest all the way down to the floor of the ocean, thousands of metres below the surface.

By 2030, the project is largely complete.** The maps provide a wealth of new information on the global seabed, revealing its geology in unprecedented detail and showing the location of ecological hotspots, as well as many shipwrecks, crashed planes, archaeological artefacts and other unique and interesting sites. Commercial applications include the inspection of pipelines, and surveying of bed conditions for telecoms cables, offshore wind farms and so on. However, concerns are raised over the potential impact of new undersea mining technology, the opportunities for which are now greatly increased.
